Something wonderful just happened with Qualified Immunity

It’s also a call to action. The post “Something wonderful just happened with Qualified Immunity” appeared first on Downsize DC.

To make large language models (LLMs) more accurate when answering harder questions, researchers can let the model spend more time thinking about potential solutions. But common approaches that give LLMs this capability set a fixed computational budget for every problem, regardless of how complex it is. This means the LLM might waste computational resources on simpler questions or be unable to tackle intricate problems that require more reasoning. To address this, MIT researchers developed a smarter way to allocate […]

Google is testing AI-powered article overviews on participating publications’ Google News pages as part of a new pilot program, the search giant announced on Wednesday. News publishers participating in the pilot program include Der Spiegel, El País, Folha, Infobae, Kompas, The Guardian, The Times of India, The Washington Examiner, and The Washington Post, among others. […]

Learn how OpenAI’s new certifications and AI Foundations courses help people build real-world AI skills, boost career opportunities, and prepare for the future of work.

Based on insights from more than 100 builders, executives, investors, advisors, and researchers from across the globe.

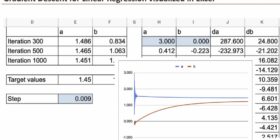

Linear Regression looks simple, but it introduces the core ideas of modern machine learning: loss functions, optimization, gradients, scaling, and interpretation. In this article, we rebuild Linear Regression in Excel, compare the closed-form solution with Gradient Descent, and see how the coefficients evolve step by step. This foundation naturally leads to regularization, kernels, classification, and the dual view. Linear Regression is not just a straight line, but the starting point for many models we will explore next in […]
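As a rough illustration of the comparison this teaser describes, the sketch below fits a one-feature linear regression two ways: with the closed-form least-squares formulas and with plain gradient descent on the mean squared error. The article itself works in Excel; the Python version, the toy data, the function names, the learning rate, and the step count are all assumptions chosen for illustration, not taken from the article.

```python
def closed_form(xs, ys):
    """Least-squares slope and intercept for y = a*x + b (single feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Minimize mean squared error by repeated gradient steps.

    Returns the final (a, b) and the history of coefficients per step,
    so their evolution can be inspected.
    """
    n = len(xs)
    a, b = 0.0, 0.0  # start from zero coefficients
    history = []
    for _ in range(steps):
        residuals = [a * x + b - y for x, y in zip(xs, ys)]
        grad_a = (2.0 / n) * sum(r * x for r, x in zip(residuals, xs))
        grad_b = (2.0 / n) * sum(residuals)
        a -= lr * grad_a
        b -= lr * grad_b
        history.append((a, b))
    return a, b, history


# Hypothetical toy data, roughly following y = 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 4.1, 6.2, 7.9, 10.1]

slope_cf, intercept_cf = closed_form(xs, ys)
slope_gd, intercept_gd, history = gradient_descent(xs, ys)

print("closed form:     ", slope_cf, intercept_cf)
print("gradient descent:", slope_gd, intercept_gd)
print("first steps:     ", history[:3])
```

With a small enough learning rate, the gradient descent coefficients approach the closed-form values. Printing more of `history` shows how they move step by step, and unscaled features with large magnitudes would typically require a smaller learning rate.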

Large language models (LLMs) sometimes learn the wrong lessons, according to an MIT study. Rather than answering a query based on domain knowledge, an LLM could respond by leveraging grammatical patterns it learned during training. This can cause a model to fail unexpectedly when deployed on new tasks. The researchers found that models can mistakenly link certain sentence patterns to specific topics, so an LLM might give a convincing answer by recognizing familiar phrasing instead of understanding the […]

As AI models grow in complexity and hardware evolves to meet the demand, the software layer connecting the two must also adapt. We recently sat down with Stephen Jones, a Distinguished Engineer at NVIDIA and one of the original architects of CUDA. Jones, whose background spans from fluid mechanics to aerospace engineering, offered deep insights into NVIDIA’s latest software innovations, including the shift toward tile-based programming, the introduction of “Green Contexts,” and how AI is rewriting the rules […]

Large language models generate text, not structured data.

A timeline of ChatGPT product updates and releases, starting with the latest, which we’ve been updating throughout the year.