India proposes charging OpenAI, Google for training AI on copyrighted content

India has given OpenAI, Google, and other AI firms 30 days to respond to its proposed royalty system for training on copyrighted content.

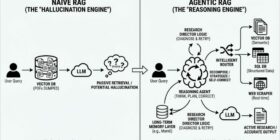

What if you could build a secure, scalable RAG+LLM system – no GPU, no latency, no hallucinations? In this session, Vincent Granville shares how to engineer high-performance, agentic multi-LLMs from scratch using Python. Learn how to rethink everything from token chunking to sub-LLM selection to create AI systems that are explainable, efficient, and designed for enterprise-scale applications. What you’ll learn: how to build LLM systems without deep neural nets or GPUs; real-time fine-tuning, self-tuning, and context-aware retrieval; Best […]

Table of Contents: KV Cache Optimization via Tensor Product Attention; Challenges with Grouped Query and Multi-Head Latent Attention; Multi-Head Attention (MHA); Grouped Query Attention (GQA); Multi-Head Latent Attention (MLA); Tensor Product Attention (TPA); TPA: Tensor Decomposition of Q, K, V; Latent Factor Maps and Efficient Implementation; Attention Computation and RoPE Integration; KV Caching and Memory Reduction with TPA; PyTorch Implementation of Tensor Product Attention (TPA); Tensor Product Attention with KV Caching; Transformer Block; Inferencing Code; Experimentation; Summary […]

Author(s): Utkarsh Mittal. Originally published on Towards AI. Introduction: XGBoost (Extreme Gradient Boosting) has become the go-to algorithm for winning machine learning competitions and solving real-world prediction problems. But what makes it so powerful? In this comprehensive tutorial, we’ll unpack the mathematical foundations and practical mechanisms that make XGBoost superior to traditional gradient boosting methods. This tutorial assumes you have basic knowledge of decision trees and machine learning concepts. We’ll walk through the algorithm step-by-step with visual examples […]

Joe Navarro is a former FBI agent and one of the world’s leading experts in body language and nonverbal communication. In this Moment, Joe reveals the hidden signals behind body language and how to use nonverbal cues, such as posture and eye contact, to your advantage in business, relationships, and beyond. Listen to the full episode with Joe Navarro on The Diary of a CEO below: Spotify: https://g2ul0.app.link/01Qhc2kbPYb Apple: https://g2ul0.app.link/NwkCj5obPYb Watch the Episodes On YouTube: https://www.youtube.com/c/%20TheDiaryOfACEO/videos Joe Navarro: https://www.jnforensics.com/

Author(s): AI Rabbit. Originally published on Towards AI. Agentic Era: If your architecture still looks like “User Query → Vector DB → LLM,” you aren’t building an AI application; you’re building a hallucination engine. The “naive” RAG era, where we just dumped PDFs into Pinecone and prayed for the best, is officially over. Here is the technical reality of RAG in 2025. The article discusses the evolution of Retrieval-Augmented Generation (RAG) pipelines, emphasizing the transition from outdated linear architectures to more […]

Ayn Rand described Thanksgiving as “a typically American holiday . . . its essential, secular meaning is a celebration of successful production. It is a producers’ holiday. The lavish meal is a symbol of the fact that abundant consumption is the result and reward of production.”

Why is the NAP better than any other ethical system? Submitted by /u/unholy_anarchist

In this article, I discuss the main problems of standard LLMs (OpenAI and the like), and how the new generation of LLMs addresses these issues. The focus is on Enterprise LLMs. LLMs with Billions of Parameters: Most LLMs still fall into that category. The first ones (ChatGPT) appeared around 2022, though BERT is an early precursor. Most recent books discussing LLMs still define them as transformer architectures with deep neural networks (DNNs), costly training, and reliance […]