Giving Real Meaning to Veterans Day

Any element of self-sacrifice in war is a betrayal of our soldiers and the American freedom they fight for.

Clark Packard: Last week, Disney+ released a restored version of The Beatles Anthology, the landmark 1995 ABC eight-part documentary series that remains the closest thing to a definitive account of the band’s extraordinary journey. I binge-watched the updated Anthology over the Thanksgiving holiday weekend, which brought back memories of watching the original with my parents 30 years ago. Now I’m the parent, though my wife and four-year-old son were less enthusiastic about the marathon viewing sessions. Last year, I […]

Standard LLMs rely on prompt engineering to fix problems (hallucinations, poor responses, missing information) that come from issues in the backend architecture. If the backend (corpus processing) is properly built from the ground up, it is possible to offer a full, comprehensive answer to a meaningful prompt without multiple prompts, query rewording, a chat session, or prompt engineering. In this article, I explain how to do it, focusing on enterprise […]

Environmental scientists are increasingly using enormous artificial intelligence models to make predictions about changes in weather and climate, but a new study by MIT researchers shows that bigger models are not always better. The team demonstrates that, in certain climate scenarios, much simpler, physics-based models can generate more accurate predictions than state-of-the-art deep-learning models. Their analysis also reveals that a benchmarking technique commonly used to evaluate machine-learning techniques for climate predictions can be distorted by natural variations in […]

Goldman Sachs has led Harness’s Series E round, with participation from IVP, Menlo Ventures, and Unusual Ventures.

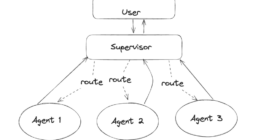

Understanding how LLM agents transfer control to each other in a multi-agent system with LangGraph. The post “How Agent Handoffs Work in Multi-Agent Systems” appeared first on Towards Data Science.

Unveiling what it describes as the most capable model series yet for professional knowledge work, OpenAI launched GPT-5.2 today. The model was trained and deployed on NVIDIA infrastructure, including NVIDIA Hopper and GB200 NVL72 systems. It’s the latest example of how leading AI builders train and deploy at scale on NVIDIA’s full-stack AI infrastructure.

Pretraining: The Bedrock of Intelligence

AI models are getting more capable thanks to three scaling laws: pretraining, post-training and test-time scaling. Reasoning models, which […]

Ernest Opoku knew he wanted to become a scientist when he was a little boy. But his school in Dadease, a small town in Ghana, offered no elective science courses — so Opoku created one for himself. Even though they had neither a dedicated science classroom nor a lab, Opoku convinced his principal to bring in someone to teach him and five other friends he had convinced to join him. With just a chalkboard and some imagination, they […]

What I’ve learned about making Pandas faster after too many slow notebooks and frozen sessions. The post “7 Pandas Performance Tricks Every Data Scientist Should Know” appeared first on Towards Data Science.

Can a 3B model deliver 30B-class reasoning by fixing the training recipe instead of scaling parameters? Nanbeige LLM Lab at Boss Zhipin has released Nanbeige4-3B, a 3B-parameter small language model family trained with an unusually heavy emphasis on data quality, curriculum scheduling, distillation, and reinforcement learning. The research team ships two primary checkpoints, Nanbeige4-3B-Base and Nanbeige4-3B-Thinking, and evaluates the reasoning-tuned model against Qwen3 checkpoints from 4B up to 32B parameters.

https://arxiv.org/pdf/2512.06266

Benchmark results

On AIME […]