The Complete Guide to Using Pydantic for Validating LLM Outputs

Large language models generate text, not structured data.
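Because the model returns a string, validation starts by parsing that string against a schema and rejecting anything that does not fit. The sketch below assumes Pydantic v2 (`model_validate_json`, `Field` constraints, `ValidationError`) is installed. The `Sentiment` schema and the `parse_llm_output` helper are hypothetical names chosen for illustration.

```python
from typing import Optional

from pydantic import BaseModel, Field, ValidationError


class Sentiment(BaseModel):
    """Hypothetical schema we expect the LLM's JSON answer to follow."""
    label: str
    # Constrain the score to [0, 1]; out-of-range values raise ValidationError.
    confidence: float = Field(ge=0.0, le=1.0)


def parse_llm_output(raw: str) -> Optional[Sentiment]:
    """Hypothetical helper: parse raw LLM text into a validated model, or return None."""
    try:
        # Parses the JSON string and validates types and constraints in one step.
        return Sentiment.model_validate_json(raw)
    except ValidationError as exc:
        # Covers malformed JSON as well as wrong types or out-of-range values.
        print(exc.error_count(), "validation error(s)")
        return None


good = parse_llm_output('{"label": "positive", "confidence": 0.92}')
bad = parse_llm_output('{"label": "positive", "confidence": "very high"}')
```

Returning `None` keeps the sketch short. In practice you might instead feed the validation errors back to the model and ask it to retry.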

I frequently refer to OpenAI and the likes as LLM 1.0, by contrast to our xLLM architecture that I present as LLM 2.0. Over time, I received a lot of questions. Here I address the main differentiators. First, xLLM is a no-Blackbox, secure, auditable, double-distilled agentic LLM/RAG for trustworthy Enterprise AI, using 10,000 fewer (multi-)tokens, no vector database but Python-native, fast nested hashes in its original version, and no transformer to generate the structured output to a prompt. […]

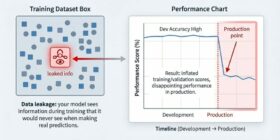
