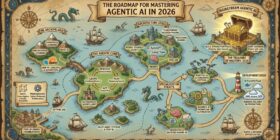

The Roadmap for Mastering Agentic AI in 2026

Agentic AI is changing how we interact with machines.

Technology is supercharging the attack on democracy and EFF is fighting back. We’re suing to stop government surveillance. We’re fighting to protect free expression online. And we’re building tools to protect your data privacy. Help support our mission with new gear from EFF’s online store, perfect gifts for the digital rights defender in your life. Take 20% off your order today with code BLACKFRI. Thanks for being an EFF supporter! Liquid Core Dice are perfect for tabletop games. The metal […]

There is growing attention on the links between artificial intelligence and increased energy demands. But while the power-hungry data centers being built to support AI could potentially stress electricity grids, increase customer prices and service interruptions, and generally slow the transition to clean energy, the use of artificial intelligence can also help the energy transition. For example, use of AI is reducing energy consumption and associated emissions in buildings, transportation, and industrial processes. In addition, AI is helping […]

Key Highlights: After a successful launch of the Gemini 3 Pro-powered Nano Banana Pro, Google appears to be gearing up for the launch of Nano Banana 2 Flash. The upcoming image generation model will likely be powered by the Gemini 3 Flash model. According to code references, the new model is internally called “Mayo.”

Nano Banana 2 Flash to come with almost similar image generation power as the “Pro”

Citing details shared by early testers of Nano Banana […]

EFF has submitted its formal comment to the U.S. Patent and Trademark Office (USPTO) opposing a set of proposed rules that would sharply restrict the public’s ability to challenge wrongly granted patents. These rules would make inter partes review (IPR)—the main tool Congress created to fix improperly granted patents—unavailable in most of the situations where it’s needed most. If adopted, they would give patent trolls exactly what they want: a way to keep questionable patents alive and out […]

Table of Contents: KV Cache Optimization via Tensor Product Attention; Challenges with Grouped Query and Multi-Head Latent Attention; Multi-Head Attention (MHA); Grouped Query Attention (GQA); Multi-Head Latent Attention (MLA); Tensor Product Attention (TPA); TPA: Tensor Decomposition of Q, K, V; Latent Factor Maps and Efficient Implementation; Attention Computation and RoPE Integration; KV Caching and Memory Reduction with TPA; PyTorch Implementation of Tensor Product Attention (TPA); Tensor Product Attention with KV Caching; Transformer Block; Inferencing Code; Experimentation; Summary […]

Environmental scientists are increasingly using enormous artificial intelligence models to make predictions about changes in weather and climate, but a new study by MIT researchers shows that bigger models are not always better. The team demonstrates that, in certain climate scenarios, much simpler, physics-based models can generate more accurate predictions than state-of-the-art deep-learning models. Their analysis also reveals that a benchmarking technique commonly used to evaluate machine-learning techniques for climate predictions can be distorted by natural variations in […]

For more than a century, meteorologists have chased storms with chalkboards, equations, and now, supercomputers. But for all the progress, they still stumble over one deceptively simple ingredient: water vapor. Humidity is the invisible fuel for thunderstorms, flash floods, and hurricanes. It’s the difference between a passing sprinkle and a summer downpour that sends you sprinting for cover. And until now, satellites have struggled to capture it with the detail needed to warn us before skies crack open. […]

In this article, I discuss the main problems of standard LLMs (OpenAI and the like) and how the new generation of LLMs addresses these issues. The focus is on Enterprise LLMs.

LLMs with Billions of Parameters

Most LLMs still fall into that category. The first ones (ChatGPT) appeared around 2022, though BERT is an early precursor. Most recent books discussing LLMs still define them as transformer architecture with deep neural networks (DNNs), costly training, and reliance […]