WW-PGD: Projected Gradient Descent optimizer

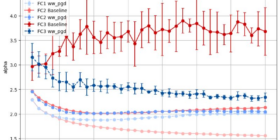

Announcing **WW-PGD** (WeightWatcher Projected Gradient Descent)

I just released WW-PGD, a small PyTorch add-on that wraps standard optimizers (SGD, Adam, AdamW, etc.) and, at each epoch boundary, applies a spectral projection guided by WeightWatcher diagnostics.

**Elevator pitch:** WW-PGD explicitly nudges each layer toward the Exact Renormalization Group (ERG) critical manifold during training.

**Theory in short**

• HTSR (Heavy-Tailed Self-Regularization) critical condition: the power-law exponent of the layer's eigenvalue spectrum is α ≈ 2
• SETOL ERG condition: the trace-log over the spectral tail vanishes, i.e. Σ log λᵢ = 0 summed over the tail eigenvalues λᵢ

WW-PGD makes these explicit optimization targets, rather than […]
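The two targets can be made concrete on a single weight matrix. The sketch below is not WW-PGD's code (the post does not show its projection rule). It is a minimal NumPy illustration under stated assumptions. The tail is taken to be the `k` largest eigenvalues of the unnormalized correlation matrix X = WᵀW. α is estimated with the continuous power-law MLE over that tail; WeightWatcher instead normalizes X and chooses the tail start with a power-law fit. The hypothetical `project` step first power-transforms the tail to α = 2 and then rescales W so that the tail's trace-log is 0. The hypothetical `train` loop mimics the wrapper pattern: ordinary gradient steps inside an epoch, followed by one projection at each epoch boundary.

```python
import numpy as np


def spectrum(W):
    """Return U, lam, Vt where lam are the eigenvalues of X = W^T W.

    Assumption: X is unnormalized here; WeightWatcher normalizes it.
    """
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return U, s ** 2, Vt  # lam is sorted in descending order


def hill_alpha(tail):
    """Continuous power-law MLE over the tail: 1 + n / sum(ln(x / x_min))."""
    return 1.0 + len(tail) / np.sum(np.log(tail / tail.min()))


def trace_log(tail):
    """ERG-style quantity: sum of ln(lambda) over the tail (0 at the condition)."""
    return float(np.sum(np.log(tail)))


def project(W, k=4, alpha_target=2.0):
    """Hypothetical epoch-boundary projection (not WW-PGD's actual rule).

    Step 1 sets the tail alpha to alpha_target; step 2 sets trace_log to 0.
    Assumes k >= 2 and distinct tail eigenvalues.
    """
    U, lam, Vt = spectrum(W)
    tail = lam[:k]
    xmin = tail[-1]
    # Step 1: lam -> xmin * (lam / xmin) ** q multiplies every ln(lam / xmin)
    # by q, so alpha - 1 is divided by q. Eigenvalues below xmin are untouched.
    q = (hill_alpha(tail) - 1.0) / (alpha_target - 1.0)
    lam[:k] = xmin * (tail / xmin) ** q
    # Step 2: scaling all eigenvalues by c^2 keeps alpha (ratios are unchanged)
    # and adds k * ln(c^2) to trace_log; choose c^2 to cancel it exactly.
    lam *= np.exp(-trace_log(lam[:k]) / k)
    # Rebuild W = U diag(sqrt(lam)) Vt with the same singular vectors.
    return (U * np.sqrt(lam)) @ Vt


def train(X, Y, epochs=5, steps_per_epoch=50, lr=1e-2, k=4, seed=0):
    """Toy loop: plain gradient descent on the mean squared error
    ||XW - Y||^2 / n, with one projection at the end of every epoch."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(X.shape[1], Y.shape[1]))
    n = X.shape[0]
    for _ in range(epochs):
        for _ in range(steps_per_epoch):
            W -= lr * (2.0 / n) * X.T @ (X @ W - Y)  # ordinary gradient step
        W = project(W, k)  # epoch-boundary projection
    return W


rng = np.random.default_rng(0)
Wp = project(rng.normal(size=(32, 16)))
_, lam, _ = spectrum(Wp)
print(f"alpha={hill_alpha(lam[:4]):.4f}  trace_log={trace_log(lam[:4]):.4f}")
```

Because α depends only on eigenvalue ratios, the global rescale in step 2 fixes the trace-log without disturbing α. That ordering is a design choice of this sketch, not a claim about how WW-PGD combines the two conditions. In a PyTorch setting, the analogous step would run on each layer's weights after the last optimizer step of an epoch.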