When Supply Chains Become Autonomous

A new simulation demonstrates how these might work—and how leadership will need to change to succeed with them.

London, UK – February 5-6, 2026 — The third edition of the Digital Assets Forum (DAF), organized by the European Blockchain Convention (EBC), will take place at Convene, 133 Houndsditch, in the heart of London’s financial district. After two sold-out, one-day editions, the forum now expands to two full days, reflecting accelerating institutional adoption and London’s strategic position as Europe’s capital markets hub for digital assets. DAF3 will gather leading figures from asset management, family offices, banks, hedge […]

Author(s): AIversity. Originally published on Towards AI. Your weekly AI roundup: Big funding, new models, AWS agent launches, Tesla’s AI5 chip, EU AI Act updates, and what it means for builders and businesses. Keep reading for links, benchmarks, and takeaways. This article discusses the significant developments in the AI space from the past week, highlighting major funding events, […]

Say a person takes their French Bulldog, Bowser, to the dog park. Identifying Bowser as he plays among the other canines is easy for the dog owner while onsite. But if someone wants to use a generative AI model like GPT-5 to monitor their pet while they are at work, the model could fail at this basic task. Vision-language models like GPT-5 often excel at recognizing general objects, like a dog, but they perform poorly at locating […]

Table of Contents: KV Cache Optimization via Tensor Product Attention; Challenges with Grouped Query and Multi-Head Latent Attention; Multi-Head Attention (MHA); Grouped Query Attention (GQA); Multi-Head Latent Attention (MLA); Tensor Product Attention (TPA); TPA: Tensor Decomposition of Q, K, V; Latent Factor Maps and Efficient Implementation; Attention Computation and RoPE Integration; KV Caching and Memory Reduction with TPA; PyTorch Implementation of Tensor Product Attention (TPA); Tensor Product Attention with KV Caching; Transformer Block; Inferencing Code; Experimentation; Summary […]

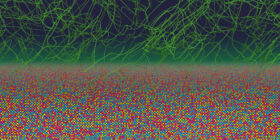

Jina AI has released Jina-VLM, a 2.4B-parameter vision-language model that targets multilingual visual question answering and document understanding on constrained hardware. The model couples a SigLIP2 vision encoder with a Qwen3 language backbone and uses an attention pooling connector to reduce visual tokens while preserving spatial structure. Among open 2B-scale VLMs, it reaches state-of-the-art results on multilingual benchmarks such as MMMB and Multilingual MMBench. https://arxiv.org/pdf/2512.04032 Architecture, overlapping tiles with attention pooling connector […]

Every year, global health experts are faced with a high-stakes decision: Which influenza strains should go into the next seasonal vaccine? The choice must be made months in advance, long before flu season even begins, and it can often feel like a race against the clock. If the selected strains match those that circulate, the vaccine will likely be highly effective. But if the prediction is off, protection can drop significantly, leading to (potentially preventable) illness and strain […]

Ceramics — the humble mix of earth, fire and artistry — have been part of a global conversation for millennia. From Tang Dynasty trade routes to Renaissance palaces, from museum vitrines to high-stakes auction floors, they’ve carried culture across borders, evolving into status symbols, commodities and pieces of contested history. Their value has been shaped by aesthetics and economics, empire and, now, technology. This figure visualizes 20 representative Chinese ceramic craftsmanship styles across seven historical periods, ranging from […]

This one little trick can bring about enhanced training stability, the use of larger learning rates and improved scaling properties. The post “NeurIPS 2025 Best Paper Review: Qwen’s Systematic Exploration of Attention Gating” appeared first on Towards Data Science.

For decades, it’s been known that subtle chemical patterns exist in metal alloys, but researchers thought they were too minor to matter — or that they got erased during manufacturing. However, recent studies have shown that in laboratory settings, these patterns can change a metal’s properties, including its mechanical strength, durability, heat capacity, radiation tolerance, and more. Now, researchers at MIT have found that these chemical patterns also exist in conventionally manufactured metals. The surprising finding revealed a […]