How to Maximize Agentic Memory for Continual Learning

Learn how to become an effective engineer with continual learning LLMs. The post How to Maximize Agentic Memory for Continual Learning appeared first on Towards Data Science.

Empromptu claims all a user has to do is tell the platform’s AI chatbot what they want — like a new HTML or JavaScript app — and the AI will go ahead and build it.

Even obvious solutions are hard to execute. The post Politicians make the case for Downsize DC appeared first on Downsize DC.

An HBR Executive Masterclass with Amy Gallo.

You’ll now get more creative control in Flow with new refinement and editing capabilities.

An overview of Google’s latest funding announcement for computer science education and the newest AI Quest.

Machine-learning models can speed up the discovery of new materials by making predictions and suggesting experiments. But most models today only consider a few specific types of data or variables. Compare that with human scientists, who work in a collaborative environment and consider experimental results, the broader scientific literature, imaging and structural analysis, personal experience or intuition, and input from colleagues and peer reviewers. Now, MIT researchers have developed a method for optimizing materials recipes and planning experiments […]

In part 2 of our two-part series on generative artificial intelligence’s environmental impacts, MIT News explores some of the ways experts are working to reduce the technology’s carbon footprint. The energy demands of generative AI are expected to continue increasing dramatically over the next decade. For instance, an April 2025 report from the International Energy Agency predicts that the global electricity demand from data centers, which house the computing infrastructure to train and deploy AI models, will more than double by […]

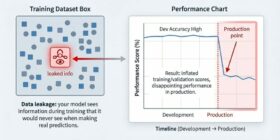

Data leakage is a problem, often accidental, that can arise in machine learning modeling.

An overview, summary, and position on cutting-edge research into the emerging topic of LLM introspection on their own internal states.