Improved Gemini audio models for powerful voice experiences


Discover how Podium used OpenAI’s GPT-5 to build “Jerry,” an AI teammate driving 300% growth and transforming how Main Street businesses serve customers.

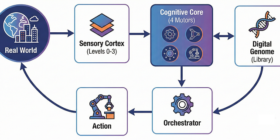

Author(s): Carlos Eduardo Favini. Originally published on Towards AI.

1. The Semantic Ceiling: Why Industrial AI Stalls

After two decades of investment, roughly 70% of digital transformation initiatives fail to scale beyond pilot stages (McKinsey, 2023). Industry 4.0 delivered connectivity (sensors, networks, data lakes) but not cognition. The result: dashboards that monitor but don’t decide, models that predict but don’t understand, and automation that breaks when […]

Nebius Token Factory customers use distillation today for search ranking, grammar correction, summarization, chat quality improvement, code refinement, and dozens of other narrow tasks.

An overview of Google’s latest funding announcement for computer science education and the newest AI Quest.

Learn how to make a newsletter with AI tools. The post “How to Create an ML-Focused Newsletter” appeared first on Towards Data Science.

Scout24 has created a GPT-5 powered conversational assistant that reimagines real-estate search, guiding users with clarifying questions, summaries, and tailored listing recommendations.

The playlists can factor in world knowledge, go back to your listening history from day one, and be refreshed daily or weekly.

The following press release was issued today by the Broad Institute of MIT and Harvard. Although outbreaks of Ebola virus are rare, the disease is severe and often fatal, with few treatment options. Rather than targeting the virus itself, one promising therapeutic approach would be to interrupt proteins in the human host cell that the virus relies upon. However, finding those regulators of viral infection using existing methods has been difficult and is especially challenging for the most […]

To make large language models (LLMs) more accurate when answering harder questions, researchers can let the model spend more time thinking about potential solutions. But common approaches that give LLMs this capability set a fixed computational budget for every problem, regardless of how complex it is. This means the LLM might waste computational resources on simpler questions or be unable to tackle intricate problems that require more reasoning. To address this, MIT researchers developed a smarter way to allocate […]