The Journey of a Token: What Really Happens Inside a Transformer

Large language models (LLMs) are based on the transformer architecture, a complex deep neural network whose input is a sequence of token embeddings.
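
To make the opening sentence concrete, here is a minimal Python sketch of how raw text becomes the sequence of token embeddings a transformer consumes. Everything in it is an illustrative assumption, not how any particular LLM is built. The six-word `VOCAB` and the `tokenize`, `make_embedding_table`, `sinusoidal_position` and `embed` helpers are hypothetical. Whitespace splitting stands in for a real subword tokenizer, and the embedding values are random here where a trained model would have learned them. The positional encoding is the sinusoidal scheme from the original transformer paper; many modern LLMs use other schemes, such as rotary embeddings.

```python
import math
import random

# Hypothetical toy vocabulary; real LLMs use learned subword tokenizers (e.g. BPE).
VOCAB = {"<unk>": 0, "the": 1, "journey": 2, "of": 3, "a": 4, "token": 5}
D_MODEL = 8  # assumed embedding width; real models use hundreds to thousands


def tokenize(text):
    """Map whitespace-separated words to token IDs (a stand-in for a real tokenizer)."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]


def make_embedding_table(vocab_size, d_model, seed=0):
    """One d_model-dimensional row per token ID.

    Values are random here; in a trained model they are learned parameters.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]


def sinusoidal_position(pos, d_model):
    """Sinusoidal positional encoding: sin on even dimensions, cos on odd ones."""
    vec = []
    for i in range(d_model):
        angle = pos / (10000 ** (2 * (i // 2) / d_model))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec


def embed(text, table):
    """Return the sequence of vectors a transformer would receive as input."""
    ids = tokenize(text)
    return [
        [e + p for e, p in zip(table[tok], sinusoidal_position(pos, len(table[tok])))]
        for pos, tok in enumerate(ids)
    ]


table = make_embedding_table(len(VOCAB), D_MODEL)
seq = embed("The journey of a token", table)
print(len(seq), len(seq[0]))  # prints: 5 8
```

Adding a position vector to each token embedding matters because self-attention on its own ignores order. Without it, the layers could not tell "a token" from "token a".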

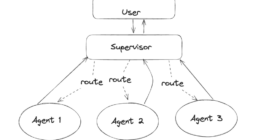
